Global, May 30, 2024

The constant security battle of how Artificial Intelligence is applied

Over recent years, more and more businesses have taken action to develop their capabilities around Artificial Intelligence, especially with Generative AI coming onto the scene as a major tech development last year.

In our Global CIO Report 2024, The Future Face of Tech Leadership, AI was identified as the number one priority for CIOs this year, made clear with these headlines:

- 87% of survey respondents reported they have established working groups dedicated wholly to AI.

- 86% said they are committed to developing stronger AI skills among their employees.

- 85% have earmarked budgets solely for AI development and implementation.

This trend of companies ramping up their AI capabilities was also clearly shown in a recent poll conducted by Gartner, in which 55% of respondents reported an increase in generative AI investment over the preceding ten months.

“Organisations are not just talking about generative AI – they’re actually investing time, money and resources to move it forward and drive business outcomes,” said Frances Karamouzis, Distinguished VP Analyst at Gartner.

“In fact, 55% of organisations reported increasing investment in generative AI since it surged into the public domain ten months ago. Generative AI is now on CEOs’ and boards’ agendas as they seek to take advantage of the transformative inevitability of this technology.”

One beneficial application of AI for security that we are already seeing, in these early days, is the way it enables security teams to analyse massive datasets of network activity. Machine learning is used to recognise abnormal patterns of behaviour and flag anomalies that could indicate an incoming attack, which might otherwise be missed by humans.
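To make the idea of flagging anomalies in network activity more concrete, here is a minimal sketch in Python. It is not how any particular security product works: it assumes a simple statistical baseline (a z-score over hypothetical requests-per-minute counts) rather than a trained machine learning model, and the function name, threshold, and sample data are all illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs from `observed` that sit far outside `baseline`.

    A value is flagged when its z-score against the baseline exceeds
    `threshold`. The default of 3.0 is an assumed, commonly used cut-off.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [
        (i, v)
        for i, v in enumerate(observed)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical requests-per-minute counts for a single host.
baseline = [100, 104, 98, 101, 97, 103, 99, 102]
observed = [101, 96, 480, 105]

print(flag_anomalies(baseline, observed))  # [(2, 480)]
```

In practice a security team would work with far richer features than a single traffic count, but the principle is the same: learn what normal looks like, then surface whatever falls well outside it for a human to review.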

Indeed, Generative AI takes this further by helping to predict and design potential exploits, even for Zero Day vulnerabilities. In addition, AI can automate a range of security responses, such as isolating infected machines or blocking suspicious traffic. This significantly improves the ever-critical Time to Respond, helps to contain attacks earlier, and minimises the damage.
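As a rough illustration of what automating a security response might look like, the sketch below maps an alert to an action such as isolating a machine or blocking traffic. Everything here is hypothetical: the `Alert` fields, the alert kinds, the 1 to 10 severity scale, and the threshold are assumptions made for the example, and the function only returns a description of the action rather than calling any real security tooling.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    host: str
    kind: str       # hypothetical labels: "malware" or "suspicious_traffic"
    severity: int   # assumed scale: 1 (low) to 10 (critical)


def choose_response(alert, auto_threshold=7):
    """Pick a response for an alert.

    Alerts below `auto_threshold`, or of an unrecognised kind, are
    escalated to a human analyst instead of being acted on automatically.
    """
    if alert.severity < auto_threshold:
        return f"escalate {alert.host} to analyst"
    if alert.kind == "malware":
        return f"isolate {alert.host}"
    if alert.kind == "suspicious_traffic":
        return f"block traffic from {alert.host}"
    return f"escalate {alert.host} to analyst"


print(choose_response(Alert("srv-01", "malware", 9)))
print(choose_response(Alert("srv-02", "suspicious_traffic", 4)))
```

Routing low-severity and unrecognised alerts to an analyst keeps a human in the loop, while high-confidence cases are contained immediately, which is where the improvement in Time to Respond comes from.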

Ultimately, with business outcomes including improved efficiency, better customer experience, and higher productivity, it’s no surprise everyone is leaning into “Good AI” and how it is set to revolutionise many industries.

“AI poses the most significant threat to the future of cyber-security, yet it also represents our greatest defence. How we harness its transformative capability is the responsibility of us all.”

Mike Fry, Security Practice Director, Logicalis

But where there’s Good, there’s Bad.

Although organisations have started to increase their knowledge and skills in recent years, threat actors are always looking for new and more malicious applications of AI technology to improve their own efficacy and profitability.

In our Global CIO Report 2024, Logicalis research found that 64% of technology leaders were worried about AI threatening their core business propositions, and 72% were apprehensive about the challenges of regulating AI use internally.

What’s more, 83% of CIOs reported their businesses had experienced a cyber-attack over the last year, and over half (57%) said their organisation was still unequipped to sustain another security breach.

To get on top of the current threat landscape, tech and security leaders around the world would also do well to read what the UK's National Cyber Security Centre (NCSC) has to say.

NCSC’s recent report on Artificial Intelligence includes eight key judgements. Here are three to provide a quick snapshot:

- AI provides capability uplift in reconnaissance and social engineering, almost certainly making both more effective, efficient, and harder to detect.

- AI will almost certainly make cyber-attacks... more impactful because threat actors will be able to analyse exfiltrated data faster and more effectively and use it to train AI models.

- Moving towards 2025 and beyond, commoditisation of AI-enabled capability in criminal and commercial markets will almost certainly make improved capability available to cyber-crime and state actors.

As Mike Fry, Security Practice Director at Logicalis, comments:

“We all need to recognise that AI is yet another digital capability in the ever-evolving cyber future.

“The real issue is if ‘Bad AI’ moves faster, better, and quicker than current legacy defences, and we fail to embrace AI as defenders. When that happens, the threat actors will nearly always win.

“In other words, if we are not operating with AI-enabled defences to counter AI-enabled attacks, how can we expect to overcome this risk to our businesses? The goal is to fight these advanced attacks with automation tools and facilitate human intervention that detects, interprets, and responds to the threat before it has a chance to make an impact.”

Download your copy of our whitepaper

Re think security in the era of AI

Tracking how businesses can maximise the opportunities and minimise risk

Related Insights

Global , Feb 16, 2026

Why humans are still the weakest link in your organisation's cyber defence

Attackers don’t break in: they log in. Our latest global research from IDC reveals why human identities are the weakest link behind every major ransomware entry point.

Global , Feb 12, 2026

Announcement

Our People: Belong, Grow and Thrive

We know that when individuals from diverse backgrounds come together, innovation flourishes and real value is created – not just for our business, but for the technology sector and the wider world. That’s why we’re committed to building an environment where everyone can belong, grow and thrive.

Global , Feb 11, 2026

Q&A with Mike Fry: Tackling the Cybersecurity Skills Gap

To accompany the release of the recent IDC infographic “From Shortage to Strength: Fixing the Cyber Skills Gap,” we sat down with Mike Fry, to get his insights and what they mean for organisations today.

Global , Feb 4, 2026

Is cybersecurity burnout our biggest looming threat?

As Logicalis launch their latest Cybersecurity Newsletter on LinkedIn, Mike Fry explores why alert fatigue, complexity and human‑centred risks may be today’s biggest threat to resilience.

Global , Jan 12, 2026

From shortage to strength: Fixing the cyber skills gap

Discover why the cybersecurity skills gap is putting businesses at risk and how managed security services can bridge the shortage. Learn strategies to boost resilience, reduce costs, and stay ahead of AI-driven threats.

Global , Dec 10, 2025

Announcement

AI and digital transformation: COP30 signalled a new era for climate action

COP30 in Belém, Brazil marked a turning point for climate action, elevating technology and AI as key drivers of sustainability. Discover how digital innovation, from emissions tracking to AI-powered resilience, is shaping the future of climate strategy and operational excellence.

Global , Dec 8, 2025

What COP30 means for digital transformation and sustainability

COP30 was held in Brazil this year, with much attention focused on adaptation finance and forest protection but for the first time we saw the rise of technology and AI on the agenda.

Global , Dec 4, 2025

Threat Hunters: The front-line defenders in a modern SOC

“Threat hunting isn’t just about locating adversaries; it’s about anticipating their moves, proactively searching for hidden risks, and transforming intelligence into action before a breach occurs, says Gandhiraj Rajappan, SOC Manager at Logicalis Asia Pacific.

Global , Nov 19, 2025

Why APAC is the prime target for cybercriminals

The Asia-Pacific region has become a global hotspot for cybercrime, accounting for 34% of incidents in 2024. As a critical component of the global supply chain and its position as a technology and manufacturing hub, the region is an irresistible target for cybercriminals.

Global , Oct 30, 2025

The power of Responsible Business

Customers, partners, investors, and employees are all looking for organisations that not only deliver results but do so with integrity, transparency, and a genuine commitment to making a positive impact. This is where a Responsible Business department comes in – and why its role is more critical than ever.

Global , Oct 30, 2025

Press Release

Logicalis invests in and expands Intelligent Security solutions to combat escalating cyber threats

With cyber threats reaching critical levels worldwide, 88% of organisations experienced a cybersecurity incident in the last 12 months, and 43% faced multiple breaches. In response, Logicalis announces strategic investment and an expanded portfolio of Intelligent Security solutions, designed to give organisations proactive protection, continuous visibility, and regulatory confidence in an increasingly complex threat landscape.

Global , Oct 29, 2025

Press Release

Logicalis achieves major sustainability milestones and expands global community impact

A strong focus on people, planet and communities has driven several notable achievements, including carbon neutrality for Scope 1 and 2 emissions, more women in leadership positions and more under-represented groups reached through community STEM education programmes.

Global , Sep 25, 2025

Pushing the boundaries - why diversity is the key to tech's future

The technology sector shapes the world we live in and the future we imagine. VP of Global Alliances, Anita Swann, shares her perspective on the boundaries that the tech industry still has to break and how it can be done.

Global , Sep 24, 2025

Logicalis CEO walking the walk with Sustainability

Logicalis CEO Bob Bailkoski is leading by example on sustainability, helping customers cut carbon and energy costs while driving internal carbon neutrality and community impact.

Global , Jul 29, 2025

The Data Dilemma of Sustainability: Why Measuring Impact Isn’t Just a Numbers Game

Explore how Logicalis tackles sustainability data challenges using IBM Envizi, blending ESG metrics with qualitative insights for meaningful impact.

Global , Jul 22, 2025

In the news, Blog

Simplifying Cybersecurity: A Strategic Imperative for the Digital Age

As cyber threats grow, organisations add more tools—yet this complexity itself has become a major security risk. Artur Martins, CISO Logicalis, highlights how CIOs can develop simpler, modern security strategies

Global , Jul 22, 2025

In the news, Blog

Untangling complex cybersecurity stacks in a supercharged risk environment

Artur Martins, Logicalis CISO discusses the growing complexity of cybersecurity stacks in the face of escalating cyber threats.

Global , Jul 22, 2025

In the news, Blog

The Engine for Business Growth: Embracing Innovation and Technology

Logicalis CEO, Bob Bailkoski unpacks the key insights from this year’s CIO Report, offering actionable strategies for success.

Global , Jul 7, 2025

Balancing AI growth with carbon reduction goals

Artificial intelligence has moved from the fringes of operational considerations to the forefront of operations. As organisations race to implement AI innovations, we all face a critical question, how do we reconcile the energy-intensive nature of AI with existing sustainability commitments?

Global , Jun 25, 2025

Blog, Videos

Logicalis reaches carbon neutrality: Scope 1 & 2 emissions milestone achieved

Watch as Bob Bailkoski discusses how Logicalis has achieved carbon neutrality for its Scope One and Scope Two emissions, reaching its 2025 target.

Global , Jun 19, 2025

From carbon neutral to net-zero: Why milestones matter on the road to sustainability

At Logicalis sustainability isn’t a secondary activity. It’s embedded in our DNA. As we mark our achievement of carbon neutrality for Scope 1 and 2 emissions, it’s a moment to reflect on not only how far we’ve come, but also on the road ahead.

Global , Jun 12, 2025

Inside the World of Managed Security Services: An Interview with James Hampson

To uncover the inner workings of cybersecurity and the services offered by Logicalis, we spoke with James Hampson, Managed Security Services Director. His insights into the Security Operations Center (SOC) reveal the complexities, challenges, and triumphs of safeguarding customer environments.

Global , May 29, 2025

Why circular IT is a strategic imperative for CIOs in 2025

The convergence of sustainability and profitability is reshaping how CIOs approach technology strategy. Circular IT is no longer just a green initiative – it’s a strategic imperative. By extending the lifecycle of IT assets, reducing e-waste, and leveraging vendor takeback programs, CIOs can transform their departments into sustainability powerhouses while unlocking new economic value.

Global , May 27, 2025

Blog, Videos

Logicalis celebrates World Cultural Diversity Day

In celebration of World Cultural Diversity Day, we’re honouring the unique stories and perspectives that strengthen our team. When diverse voices unite, creativity thrives and innovation follows. Watch now ...

Global , May 27, 2025

The Value of Human Teams in a SOC: Enhancing Security Operations

Why technology alone isn't enough to safeguard your organisation - the importance of the human element in a security operations centre (SOC) and why it can't be underestimated. Article by Artur Martins, CSIO Logicalis Portugal.

Global , May 20, 2025

Tackling the tech gender gap

The gender gap in tech remains a persistent issue and without action, disparities in representation and pay will continue. Dina Knight joins HR Grapevine's podcast to discuss how Logicalis is committed to change—challenging bias and building an inclusive, empowering environment for women in tech.

Global , May 16, 2025

How Managed XDR provides CIOs with confidence in their Cybersecurity coverage

Roger Loh, Head of Global Solutions, Logicalis, explores how Managed Extended Detection and Response (MXDR) equips CIOs with the visibility, control, and expert support needed to strengthen cybersecurity posture and reduce risk across the enterprise.

Global , May 13, 2025

AI that works for you in the age of watsonx

IBM’s watsonx platform helps organisations strike the right balance between building custom solutions and adopting ready-made AI to drive real value. Scott Hodges explores the platform’s capabilities and uncovers how it can accelerate innovation across your business.

Global , May 13, 2025

From Smart Factories to Smart Ports: The Rise of Private 5G

Private 5G is redefining enterprise connectivity. While initial costs may be higher than Wi-Fi, the long-term gains in automation, efficiency, and ROI make it a smart investment for future-ready businesses.

Global , Apr 29, 2025

Blog, Videos

Shaping the future women in tech

Logicalis' VP of Global Alliances, Anita Swann, shares her perspectives on the future of tech and the importance of encouraging more people to enter the industry.

Global , Apr 29, 2025

Leadership: A journey of learning and responsibility

Leadership is often perceived as guiding a team toward success, making critical decisions, and ensuring smooth operations. However, in my experience, leadership is much more than that. It’s about learning, adapting, and taking responsibility—not just for yourself but for the team as a whole.

Global , Apr 23, 2025

The triple bottom line: People, planet and profit

Leading the charge in sustainability not only benefits the planet but also affects people and profits, aligning with the concept of the triple bottom line. This mindset is not exclusive to IT; anyone, from plumbers to software developers, can adopt a more sustainable mindset to minimise environmental impact.

Global , Apr 23, 2025

Shaping a more inclusive future

Technology is a cornerstone of the modern world, yet the industry remains dominated by men, with women only making up a fraction of overall leadership positions. The question is, how can technology truly serve everyone if half the population it serves are underrepresented in shaping its future?

Global , Apr 10, 2025

Developing pragmatic and profitable partnerships

Technology partnerships are essential in this evolving landscape. While many CIOs believe vendors understand their business needs, 59% find vendor solutions are too complex to manage effectively.

Global , Apr 10, 2025

Avoiding the security spending black hole

The Logicalis Global CIO Report 2025 uncovers a paradox that increased spending has not reduced the frequency of security breaches.

Global , Apr 10, 2025

Innovation with intent: The CIO's mandate to unlock growth through technology

Emerging technologies such as AI, machine learning and IoT are becoming central to business transformation. The Logicalis CIO Report 2025 reveals a growing emphasis on demonstrating tangible business impact.

Global , Apr 9, 2025

ESG meets ROI: Technology's dual dividend

The increasing intersection of environmental sustainability and business performance has led to 91% of organisations surveyd in the Logicalis CIO Report 2025 reporting that they have experienced financial benefits form adopting environmental technologies.

Global , Mar 17, 2025

Blog, Videos

Celebrating International Women's Day 2025

To celebrate IWD 2025, we hosted a webinar with senior leaders across Logicalis to discuss rights, equality and empowerment for women.

Global , Feb 7, 2025

Introducing Logicalis’ Interim Responsible Business Leader

Logicalis has a new Interim Responsible Business leader, Nick Zinzan. Read this Q&A session to understand more about his background and his key focus for Logicalis' key sustainability goals for 2025.

Global , Jan 14, 2025

Architecting change: Gender bias and broader DEI challenges

In this article, Dina Knight, CPO Logicalis shares her personal and professional experience with gender bias, wider DEI industry challenges, and practical examples of how to architect change towards true inclusivity.

Global , Dec 6, 2024

International Workforces: the balance of global policies and local relevance

Speaking to HR World, our CPO Dina Knight shares valuable insights about successfully managing and nurturing diverse multicultural workforces.

Global , Nov 28, 2024

Future Face of Tech Leadership: Mastering the ‘Trifecta of Disruption’

"Disruption is redefining tech leadership, with regulation emerging as a critical new force," according to Logicalis CEO Robert Bailkoski.

Global , Nov 5, 2024

In the news, Blog

Logicalis renews Microsoft Global Azure Expert MSP status

Logicalis are proud to announce they have renewed the prestigious Global Azure Expert Managed Service Provider (AEMSP) certification, validating the highest possible standard of managed cloud services.

Global , Sep 30, 2024

Interview series - Part 2: APAC CEO Chong-Win Lee shares regional trends from the 2024 Logicalis CIO Report

Antoinette Georgopoulos, Content and Communications Manager at Logicalis Australia, continues her interview with Chong-Win Lee (Win), CEO of Logicalis Asia Pacific.

Global , Aug 28, 2024

Blog

Circular logic: Why IT leaders need to embrace circularity for sustainable IT

Discover how embracing Circular IT can transform your IT department into a sustainability powerhouse. This article explores the top 5 ways IT leaders can deliver on sustainability goals.

Global , Aug 8, 2024

Blog

Logicalis scales to new heights with Microsoft in FY24

With awards season behind us and an outstanding year of growth, customer outcomes and new capabilities, Logicalis, has received significant recognition for our outstanding partnership with Microsoft in FY24.

Global , Aug 6, 2024

In the news, Blog

Tech polluting as much as airlines. Why crystal-clear ambition is needed

CIOs are no longer peering into the sustainability debate from the outskirts. They are at the centre of it, driving conversations and influencing future decision-making.

Global , Jul 31, 2024

In the news, Blog

Women in tech: Finding an employer that will support career growth

Dina Knight, CPO at Logicalis shares her insights on what women should look out for when considering organisations that will support their career growth.

Global , Jul 31, 2024

Blog

How can C-level leaders unlock enterprise value through Sustainability?

Read how CEOs and other top executives can champion sustainable practices, to inspire the entire company to prioritise responsibility and innovation. Join our LinkedIn live - Increasing Enterprise Value through Sustainability - on Wednesday 11th September 2024.

Global , Jul 31, 2024

APAC CEO Chong-Win Lee shares regional trends from the 2024 Logicalis CIO Report

Antoinette Georgopoulos, Content and Communications Manager at Logicalis Australia, caught up with Win to discuss his thoughts on the Asia Pacific trends and results from the Logicalis Global CIO Report for 2024.

Global , Jun 14, 2024

Unlocking the future of mining: Logicalis at the Future of Mining Perth 2024

We’re thrilled to announce that Logicalis Australia will be attending the Future of Mining Conference 2024 in Perth for the very first time!

Global , May 30, 2024

How Logicalis is strengthening cybersecurity for a secure future

Discover how Logicalis' Intelligent Security solution leverages advanced technologies and proactive strategies to provide comprehensive threat protection, detection, and response capabilities for your business. Stay ahead of the ever-growing cybersecurity threat landscape.

Global , May 30, 2024

Join Us at EXPONOR: Unlocking the Future of Mining

As part of our Logicalis Mining World Tour, we are thrilled to announce our participation at EXPONOR, an exhibition held in Antofagasta, Chile, to assist our mining customers in solving their most complex challenges.

Global , May 10, 2024

Re think Security

Discover how an intelligent approach to security and leveraging AI can protect your workplace, connectivity, and cloud infrastructure.

Global , May 7, 2024

My life building bridges (and opportunities) in tech

Anita Swann, Group Alliance Director, Logicalis offers her advice as to what do we need to grow the tech industry of the past into the tech industry of the future.

Global , Apr 12, 2024

A decade of insight reveals the future of tech leadership in the Logicalis Global CIO Report 2024

It’s been ten years since the Logicalis CIO report was launched. It was designed as a pulse check on the mood in the industry, to identify common CIO challenges and ambitions and to serve as a reminder that we are part of an ever-changing ecosystem that evolves each year.

Global , Jan 31, 2024

The remarkable impact of breathwork on wellbeing

The next session in our Revive and Thrive series focuses on the damage done by stress and how we can alleviate not just stress, but also build abilities and skills that support our overall wellbeing.

Global , Dec 11, 2023

Progressing your responsible business journey

Our responsible business agenda has been shaped by understanding who we are as a business, which social and environmental challenges are important to our customers, partners, people and in the regions that we operate in.

Global , Dec 11, 2023

Inclusion starts with me

Investigating making the invisible visible, the first session in our Revive and Thrive series provided an understanding of how we can look after each other's mental health by changing behaviours, from unconscious bias to consciously include people.

Global , Nov 23, 2023

How technology is changing environmental sustainability

The enablement of sustainability strategies through technology and IT, in this instance is what can truly enable business growth and ESG performance, and there are several ways that they tie together.

Global , Nov 15, 2023

The ethical implications of lacking sustainable business practices

A sustainable society is one that lives within the carrying capacity of its natural and social systems, but as the impact of businesses on the environment has increased dramatically, that balance for a sustainable society has tipped.

Global , Oct 26, 2023

The evolution of ESG: from corporate 'nice-to-have' to corporate necessity

Corporate sustainability has become more than a “nice to have”. We are seeing more and more organisations realise the urgency to act not just because of regulatory pressure, but also because they see sustainability as a way to drive business benefits. Environmental and social governance or ESG which considers sustainability policies and ethical practice, is moving out of the shadows and becoming the next big bet in business transformation providing clear shared value.

Global , Oct 18, 2023

Choosing the right MSSP -Top 5 credentials to look for when selecting MSSP

Our recent CIO survey shows over half of respondents plan to increase their risk management investment. They also consider malware and ransomware significant risks that their organisations will face in the coming year. But what should an organisation look for in choosing the right MSSP?

Global , Oct 16, 2023

Forward Faster: Accelerating SDGs and Sustainable Leadership

While we are working hard on our robust responsible business plan, especially with regards to our sustainability goals, there is still a long way for us all to go. Responsible Business Manager Sharon Kekana, recently participated in the UN Global Compact Leaders Summit, held at the Javits Centre in New York.

Global , Oct 16, 2023

In the news, Blog

5G and AI: drivers of reindustrialisation in developed countries

Emerging technologies, such as 5G, IoT and Artificial Intelligence (AI), have the potential to promote profound transformations in the way global industry is organized.

Global , Oct 4, 2023

Sustainable travel – a realistic goal in the IT industry?

Catriona Walkerden, Global VP, Marketing takes us through how the new sustainable travel policy at Logicalis influenced her recent business travel decisions. See how she got on ...

Global , Sep 22, 2023

Rethink SASE and SD-WAN with Roger Loh

Roger Loh, Head of Solutions, Digital Transformation, Logicalis Asia, looks at how the adoption of SD-WAN is driving benefits for Logicalis's customers in Asia.

Global , Sep 22, 2023

Rethink Intelligent Connectivity with Vivian Heinrichs

Intelligent Connectivity from Logicalis delivers next generation connectivity to sustain, secure and scale your business.

Global , Sep 22, 2023

Rethink Connectivity at scale with Logicalis CTO Toby Alcock

As leaders how can you scale your connectivity in response to the fast-paced shifts in the economic landscape?

Global , Sep 22, 2023

Rethink the Connected Employee Experience with Logicalis CTO Toby Alcock

Hybrid working is a trend that’s set to stay. So how can you seamlessly deliver the same level of in the office connectivity to your employees no matter when, where or how they choose to work?

Global , Sep 22, 2023

Rethink Connectivity challenges with Rob Price, BU Director for Networking and Connectivity UKI

A key factor driving the evolution of how we live, work and trade is connectivity. So, what are they key trends top of mind for our customers as they navigate this ever-evolving world?

Global , Sep 22, 2023

Rethink Scalable Connectivity with Logicalis CTO Toby Alcock

As leaders how can you scale your connectivity in response to the fast-paced shifts in the economic landscape?

Global , Sep 22, 2023

Rethink Sustainable Connectivity with Logicalis CTO Toby Alcock

Sustainability is the challenge of our age, and CIOs are taking more and more responsibility in ensuring their organisations hit their green targets. So how is connectivity facilitating more sustainable business practices?

Global , Sep 22, 2023

Rethink Secure Connectivity with Logicalis CTO Toby Alcock

As leaders how can you secure your connectivity for an evolving world?

Global , Sep 22, 2023

Rethink Intelligent Connectivity with Logicalis CTO Toby Alcock

Intelligent Connectivity from Logicalis delivers next generation connectivity to sustain, secure and scale your business.

Global , Sep 18, 2023

The role of technology in achieving sustainability goals

Technology has undoubtedly revolutionised the world, however, technology and sustainable approaches are not mutually exclusive. There is a duality to technology and sustainability; while technology itself must be made more sustainable, the way we use technology may be the key to acting more sustainably and tackling the climate crisis.

Global , Sep 4, 2023

The power of next generation connectivity

Business leaders need to create environments that can adapt to maximise opportunities, mitigate risks and most importantly be able to scale both securely and sustainably. The key to this is connectivity.

Global , Aug 4, 2023

Logicalis celebrates incredible growth of Microsoft performance

FY23 has been an extraordinary year for Logicalis’s Microsoft partnership. We’ve seen 60% year-on-year growth reflecting our unwavering commitment to helping customers deliver business value through digital transformation.

Global , Jul 14, 2023

Is sustainability becoming the mandatory brand value?

Employees and customers are looking to organisations for proactive sustainability action to help tackle the worsening climate situation. How can marketing help drive the right behaviour in organisations?

Global , Jul 10, 2023

Employee burnout: Addressing the root causes and empowering leaders to combat it

Employee burnout has become an increasingly alarming concern in recent years and it's now a necessity for modern businesses to empower leaders to proactively address burnout, creating a workplace that promotes employee well-being and productivity.

Global , Jun 2, 2023

Do managed service providers hold the answers to a sustainable future in IT?

With sustainability top of mind for everyone, organisations are demanding transparency and clarity from suppliers and partners around ESG goals today.

Global , May 12, 2023

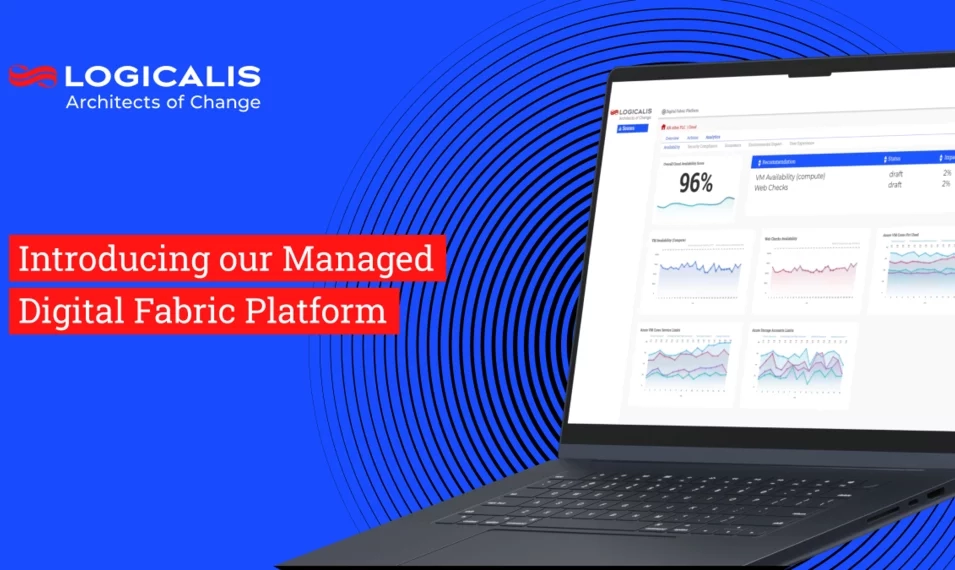

Introducing our Managed Digital Fabric Platform

In response to the need to support our customers and focus on critical activities that matter, such as optimising resources and reducing costs, in 2022, we developed the Managed Digital Fabric platform. Based on machine learning and AI, The Managed Digital Fabric Platform provides our managed services customers with a real-time view of their digital infrastructure across cloud, security, workplace, and connectivity.

Global , May 9, 2023

Superhuman scale: What's possible with the next generation of digital managed services?

The era of digital managed services is upon us, offering superhuman scale for an organisation’s technology ecosystem. What can you expect from this new generation of digital MSPs? Markus Erb, VP of Services for Logicalis, shares his insights on the possible.

Global , Apr 6, 2023

Re think: Economic Resilience

Read our whitepaper, Economic Resilience, to help navigate economic unpredictability and face the new normal 'permacrisis'.

Global , Apr 3, 2023

How CIOs are building resilience with digital managed services

This blog explores how CIOs are building resilience with digital managed services.

Global , Apr 3, 2023

Three ways CIOs are driving the transition to net zero

The transition to net zero offers a great opportunity for CIOs to increase their strategic influence across the business. The Logicalis Global CIO Report 2023 identified three ways CIOs are driving the transition to net zero.

Global , Feb 1, 2023

Driving sustainability as Architects of Change

Being a responsible business is about the ability to make a commitment and make a difference. Charissa Jaganath discusses what Logicalis is doing to make a difference and why driving sustainability is so important to us.

Global , Jan 23, 2023

Logicalis CTO Toby Alcock highlights enterprise tech trends for 2023

Digital innovation, cybersecurity, workplace talent, sustainability, and recession-proofing top the list At Logicalis, as we look forward to yet another busy and challenging year for the IT industry, we've put together the strategic technology trends for 2023 that can help IT leaders see through the current economic and market challenges and deliver sustainable business outcomes that matter.

Global, Nov 22, 2022

How Digital Managed Services are driving business value for CIOs

Today’s CIOs face key challenges around futureproofing, innovating, and the perennial skills shortage. As businesses increasingly adopt a digital-first approach, CIOs need to deliver solutions and services that are future-ready, to mitigate the risks of these challenges.

Global, Nov 16, 2022

Logicalis's Intelligent Connectivity solution now Powered by Cisco

Logicalis's Cisco Powered Intelligent Connectivity delivers SD-WAN and SASE offerings in a managed capacity, providing real-time insights into network performance. Find out more.

Global, Oct 11, 2022

Confessions of a CEO: In a climate conscious world, does the train beat the plane?

More and more climate-conscious travellers are opting to take the train instead of flying. With this in mind, and a business meeting coming up in Cologne, Bob decided to take the 580km trip by train. Here's how he got on.

Global, Sep 28, 2022

Logicalis welcomes the overhaul of the Microsoft partner eco-system

Read how Logicalis has worked really hard, not only to be aligned with Microsoft Cloud, but also with Microsoft’s focus sectors. We have deep expertise in retail, health and financial services, and can leverage the full potential of Microsoft Cloud in every sector.

Global, Jul 9, 2022

Strong digital foundations are the key to enterprise agility

Safeguard your employees and data with a secure IT foundation. Learn how managed cloud services can protect staff, productivity, and morale in today's hybrid and remote work environment.

Global, Jun 16, 2022

Do CIOs hold the key to unlocking sustainable innovation?

Businesses need robust plans to lower emissions and reduce their impact on the environment. They require innovation that is not only sustainable but also efficient, achieved by taking a data-driven approach and managing IT to outcomes, not uptime.

Global, May 11, 2022

Secrets from future-facing CISOs for long-term success

Discover strategies for maintaining security and driving innovation in the modern marketplace. Learn how to balance risk and ensure long-term success in data security and risk management.

Global, Apr 21, 2022

Rethink connectivity to create productivity and scalability

Discover how businesses can optimise processes, reduce costs, and deliver new products at scale through the power of IoT connectivity.

Global, Apr 19, 2022

Logicalis reflect on three-year journey of innovation and growth with Microsoft

Discover how Logicalis partners with Microsoft to provide the best hybrid cloud solutions and managed services. Learn about our advanced specialisations and industry-leading solutions.

Global, Mar 31, 2022

Allow employees to collaborate freely

Boost productivity and foster effective communication with employee collaboration tools. Find out how to support your dispersed workforce and drive business success.

Global, Mar 24, 2022

CIO priorities: Business continuity, resilience and mitigating risk

With the right strategic approach, companies can combine security, resilience, and innovation to create a clear competitive advantage, but businesses must act now to capitalise on the tools and skills available to them.

Global, Mar 22, 2022

A new generation of workers demand a new approach to the workplace

The rise of remote working and a younger, digitally native workforce is shaking up traditional business structures. Many organisations are still adapting their workspaces to cope and, in some instances, need to overhaul their approach to work entirely.

Global, Mar 15, 2022

Diversity as a key differentiator in transformation

Discover how embracing equality, diversity, and inclusion can drive business success. Learn why diverse and inclusive workplaces are essential in today's competitive landscape.

Global, Mar 9, 2022

Actionable insights are the missing link to collaboration in the hybrid workplace

Discover how to overcome barriers in the hybrid workplace and promote collaboration for increased innovation. Learn how data and insights can drive better employee communication and results.

Global, Feb 16, 2022

Rethink the modern workspace to empower employees

Discover how senior leaders can create a balance between business needs and employee preferences to cultivate a productive hybrid office environment.

Global, Feb 9, 2022

Is effective collaboration no longer bound by time or place?

Discover how to empower your employees and optimize collaboration in the digital workplace for enhanced productivity and success.

Global, Feb 1, 2022

Business trend predictions for 2022

Logicalis Group CEO Bob Bailkoski sat down with us and talked us through his top three predictions for the year ahead. Find out what they are ...

Global, Jan 26, 2022

Creating a culture of innovation and the digital workplace

Alongside the innovation within company culture, CIOs must ensure their employees are satisfied as the hybrid workplace takes shape.

Global, Jan 13, 2022

Bringing together an intergenerational workforce for the future

To attract and retain talent, leaders need to take the time to understand what younger generations really want from their work environment and consider how to empower an increasingly divided intergenerational workforce.

Global, Dec 7, 2021

Rethinking the way we work

Logicalis helps businesses rethink their approach to digital transformation strategy to take a hybrid approach with agility, scalability, and innovation at the core, building resilient businesses for the future.

Global, Nov 29, 2021

Unlocking data to drive business strategy and accelerate growth

The need for digital transformation is more pressing than ever, with the pandemic prompting companies to shift and realign their priorities. Results from this year’s survey showed that three quarters of respondents admit their organisations are struggling to unlock data.

Global, Nov 16, 2021

Logicalis recognised with 18 awards at the Cisco Partner Summit 2021

One of only five Cisco Global Gold Certified Partners, Logicalis has worked in partnership with Cisco for over 25 years to combine global expertise with intelligent, cutting-edge solutions that deliver business success through digital transformation.

Global, Sep 28, 2021

The Changing Role of the CIO - Emerging Agents of Change

Strong customer relationships have become a critical business priority according to the results of the first report in a four-part series following the eighth annual Logicalis Global CIO Survey.

Global, Sep 21, 2021

Want return on digital investments? Look to a managed service provider

Today, every business, regardless of industry, is operating in a hyper-competitive environment, with everyone battling for their place in the digital economy.

Global, Sep 14, 2021

The holy trinity in effective digital workplaces? Cloud, data, and security

Discover the key elements of a successful digital workplace strategy and how enlisting the help of an expert partner can ensure continued success. Unlock the full potential of your organisation with a holistic approach to digital workplace strategy.

Global, Sep 7, 2021

Can a lifecycle approach to cloud adoption improve success?

Cloud migration offers a range of benefits from security to scalability. Successful and optimised cloud migration, especially in the post-pandemic digital economy, will be critical for achieving real-time performance and efficiency.

Global, Aug 31, 2021

Truly successful digital transformation includes employee experience from the start

Discover how a human-centric approach to digital transformation can enable business growth and optimize employee performance. Learn how to tackle the challenges and empower your workforce with the right digital tools.

Global, Aug 26, 2021

The connected workplace is not just for offices

Discover how WiFi 6 and 5G are revolutionizing industries. Together Cisco and Logicalis bring unrivaled expertise to personalize IoT and 5G solutions for your business.

Global, Aug 24, 2021

5G: The next enterprise revolution

5G is less about high-speed connectivity and more about unleashing data and computing capability. With the ability to power future solutions such as autonomous cars, 5G technology can accelerate the growth of low-cost Internet of Things (IoT) devices and more.

Global, Aug 10, 2021

Will digital transformation help you compete in the digital economy?

Discover the power of digital transformation in driving business growth, efficiency, and customer satisfaction. Unlock new possibilities and create a digital-first organization with data at its core.

Global, Jul 5, 2021

Enabling collaboration in a digital workplace

It’s pretty clear that digital workplaces are here to stay in one form or another. As a growing number of organisations move to make flexible or remote working part of their permanent policy, there are clear benefits to a digital workplace.

Global, Jun 28, 2021

Preparing for the hybrid workforce of the future

Discover how a collaborative workplace can enhance communication, build company culture, and improve market responsiveness. Partner with experts for the right collaboration tools.

Global, Jun 15, 2021

How the global chip shortage is driving data centre projects to the cloud

Explore how moving to the cloud can offer a cost-effective and flexible solution for managing new projects and workloads during the chip shortage. Learn how cloud managed services can provide ample capacity without the high costs of an on-premises solution.

Global, Apr 28, 2021

Your business may not exist if you are not in the Cloud

Discover how transitioning to the cloud can help your business adapt to changing customer demands and achieve greater agility. Explore the benefits of cloud technology and gain a competitive edge.

Global, Apr 5, 2021

Big Data and Cloud: The perfect business partners

Discover how cloud technology and infrastructure advancements enable businesses to unlock the true value of Big Data analysis. Learn how flexible infrastructure and scalable services support variable workloads.

Global, Mar 30, 2021

Run VMware on Azure? Yep, you read it right!

Azure VMware Solution shouldn’t be overlooked as a valid step on a cloud journey. It enables migration to the cloud at an infrastructure level, without disrupting application or operations teams.

Global, Mar 26, 2021

How the pandemic uncovered the need for global solutions with local execution

Successful digital transformation requires the integration of technology into all areas of a business. The aim is to fundamentally change how an organisation operates and delivers for its customers.

Global, Mar 19, 2021

Transforming business models to focus on agility

Meet your true business technology needs with a strategic multinational technology partner. Scale solutions at pace and gain global foresight and overview for your enterprise digital transformation journey.

Global, Mar 16, 2021

Logicalis South Africa builds a digital future in local school

Learn how Logicalis South Africa supports education and empowers future Architects of Change through its CSR strategy and initiatives. Find out more about their commitment to the BBBEE Act and investments in education.

Global, Mar 8, 2021

International Women's Day 2021

Our teams around the world are committed to supporting gender equality and challenging gender bias and inequality. To celebrate International Women’s Day 2021, we spoke to a couple of women in leadership from across Logicalis.

Global, Feb 18, 2021

Cloud: Everything to play for, no time to lose

Discover why 83% of global CIOs anticipate increased demand for cloud technology. Gain valuable insights on the impetus for change and the growing enthusiasm for digital transformation.

Global, Feb 15, 2021

How to unlock resources with managed services

Outsourcing the management of IT is a proven way to increase service levels and, in many cases, decrease costs, but only if it’s done right. Identifying the right managed services partner is crucial, as is assessing their services, policies, and the way they conduct business.

Global, Feb 9, 2021

Supporting local communities across Germany

Discover how Logicalis Germany's local CSR programme is bringing technology, resources, and people together to support community-based initiatives in every region. Learn how our engagement with various charities and initiatives is making a real impact.

Global, Jan 29, 2021

Building a better world for a brighter future

Discover how our initiatives are improving diversity in the tech industry, supporting disadvantaged youth, and empowering adults transitioning into tech careers. Read about how we're making a grassroots impact.

Global, Jan 18, 2021

Unlocking the power of Big Data

Intelligent platforms, evolving technology and digital transformation have increased the potential value of data exponentially. However, to extract the greatest value from the raw material that sits beneath the surface, you need to mine, refine and distribute it effectively, whilst keeping it secure.

Global, Jan 12, 2021

Protect your business’ most important asset – its employees

Remote working is here to stay, and employees have learnt how to stay productive and proactive. However, the sudden transition to working from home has not been an easy one.

Global, Sep 16, 2020

Solidarity and technology in difficult times

In this article, Rodrigo focusses on the positives from the COVID-19 pandemic and takes pride in the professionalism and dedication of the various teams within Logicalis.

Global, Sep 16, 2019

Latin America: development, productivity, technology and education

Despite its geographical size and abundance of natural resources, Latin America is an economically small region: its GDP corresponds to approximately 7% of the world total, a proportion that has remained relatively stable since the 1970s.

Global, Aug 31, 2017

What is IBM Watson and what can it do?

Discover how IBM Watson, an AI platform for business, combines advanced analytics to provide the ultimate cognitive supercomputer available as a SaaS and APIs for developers.